When troubleshooting a network degradation or outage, measuring network performance is critical to determining when the network is slow and what the root cause is (e.g., saturation, bandwidth outage, misconfiguration, or a defective network device).

Whatever approach you take to the problem (traffic capture with network analyzers such as Wireshark, SNMP polling with tools such as PRTG or Cacti, or active testing that generates traffic with tools such as SmokePing, ping, or traceroute to track network response times), you need indicators. These are usually called metrics, and they are meant to produce tangible figures when measuring network performance.

This article explains three major indicators for measuring network performance (latency, throughput, and packet loss) and how they interact with each other in TCP and UDP traffic streams.

- Latency is the time required to transmit a packet across a network:

  - Latency may be measured in many different ways: round trip, one way, etc.

  - Latency may be impacted by any element in the chain used to transmit data: workstation, WAN links, routers, local area network (LAN), server, and so on. Ultimately, in the case of very large networks, it is limited by the speed of light.

- Throughput is defined as the quantity of data sent or received per unit of time.

- Packet loss reflects the number of packets lost per 100 packets sent by a host.

Knowing how these three metrics behave can help you understand the mechanisms of network slowdowns.
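As a minimal sketch of how the three definitions above translate into figures, the following Python snippet derives them from raw counters. The `TransferSample` structure and its field names are hypothetical, chosen only for illustration; real tools expose these counters in their own formats.

```python
from dataclasses import dataclass


@dataclass
class TransferSample:
    """Raw counters for one measurement interval (hypothetical structure)."""
    bytes_received: int      # payload bytes that arrived during the interval
    duration_s: float        # length of the interval in seconds
    packets_sent: int        # packets the sender emitted
    packets_received: int    # packets the receiver actually saw
    rtt_samples_ms: list     # round-trip times measured during the interval


def throughput_mbps(sample):
    """Quantity of data per unit of time, in megabits per second."""
    return sample.bytes_received * 8 / sample.duration_s / 1_000_000


def packet_loss_percent(sample):
    """Packets lost per 100 packets sent."""
    lost = sample.packets_sent - sample.packets_received
    return 100.0 * lost / sample.packets_sent


def mean_rtt_ms(sample):
    """Average round-trip latency in milliseconds."""
    return sum(sample.rtt_samples_ms) / len(sample.rtt_samples_ms)


# Illustrative numbers only, not measurements from this article.
sample = TransferSample(
    bytes_received=12_500_000,
    duration_s=10.0,
    packets_sent=10_000,
    packets_received=9_800,
    rtt_samples_ms=[29.0, 31.0, 30.0],
)
print(f"throughput:  {throughput_mbps(sample):.1f} Mbps")
print(f"packet loss: {packet_loss_percent(sample):.1f} %")
print(f"mean RTT:    {mean_rtt_ms(sample):.1f} ms")
```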

Measuring Network Performance: UDP

UDP Throughput is Not Impacted by Latency

UDP is a protocol used to carry data over IP networks. One of the principles of UDP is that the sender assumes all packets sent are received by the other party (or that this kind of control is performed at a different layer, for example by the application itself).

In theory, or for some specific protocols (where no control is undertaken at a different layer; e.g., one-way transmissions), the rate at which the sender can send packets is not impacted by the time required to deliver the packets to the other party (= latency). Whatever that time is, the sender will send a given number of packets per second, which depends on other factors (application, operating system, available resources, and so on).
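The point can be illustrated with a small Python sketch using the standard `socket` module. The helper name `send_udp_burst` and the payload size are assumptions made for this example. The sending loop never waits for a reply, so its pace does not depend on how long the datagrams take to reach the other side.

```python
import socket
import time


def send_udp_burst(host, port, count, payload_size=1200):
    """Send `count` datagrams back to back and return the elapsed seconds.

    The loop never waits for a reply: UDP has no acknowledgment, so the
    pace is set by the application and the OS, not by network latency.
    """
    payload = b"x" * payload_size
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sender:
        start = time.perf_counter()
        for _ in range(count):
            sender.sendto(payload, (host, port))
        return time.perf_counter() - start


# Loopback demo: a local receiver socket stands in for the remote party.
with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as receiver:
    receiver.bind(("127.0.0.1", 0))   # port 0 lets the OS pick a free port
    port = receiver.getsockname()[1]
    elapsed = send_udp_burst("127.0.0.1", port, count=100)
    print(f"sent 100 datagrams in {elapsed * 1000:.2f} ms")
```

Pointing the same function at a distant host would take roughly the same time on the sender side, although nothing in the code would reveal whether the datagrams actually arrived.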

Measuring Network Performance: TCP

TCP is Directly Impacted by Latency

TCP is a more complex protocol as it integrates a mechanism which checks that all packets are correctly delivered. This mechanism is called acknowledgment: it consists of having the receiver transmit a specific packet or flag to the sender to confirm the proper reception of a packet.

TCP Congestion Window

For efficiency purposes, packets are not acknowledged one by one, and the sender does not wait for each acknowledgment before sending new packets. Instead, the number of packets that may be sent before the corresponding acknowledgment packet is received is managed by a value called the TCP congestion window.

How the TCP Congestion Window Impacts Throughput

Assuming that no packet gets lost, the sender sends a first quota of packets (corresponding to the TCP congestion window) and, when it receives the acknowledgment packet, increases the TCP congestion window. The number of packets that can be sent in a given period of time (the throughput) therefore increases progressively. The delay before acknowledgment packets are received (= latency) determines how fast the TCP congestion window grows, and hence how fast throughput grows.

When latency is high, the sender spends more time idle (not sending any new packets), which slows the growth of throughput. (See our series of articles on TCP.)
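The effect of latency on window growth can be sketched with a deliberately simplified model. The assumptions here are not taken from this article: the window starts at 10 segments, doubles after each acknowledged round trip (as in TCP slow start), and is capped at 64 segments. Real TCP stacks are more elaborate.

```python
def transfer_time_s(total_segments, rtt_s, initial_window=10, max_window=64):
    """Estimate the time to deliver `total_segments` with no packet loss.

    Simplified model: the sender emits one window per round trip, then
    doubles the window after each acknowledged round, up to `max_window`.
    """
    window = initial_window
    sent = 0
    round_trips = 0
    while sent < total_segments:
        sent += window                         # one full window per round trip
        round_trips += 1
        window = min(window * 2, max_window)   # window grows once ACKs arrive
    return round_trips * rtt_s


for rtt_ms in (1, 30, 60, 90):
    seconds = transfer_time_s(total_segments=1000, rtt_s=rtt_ms / 1000)
    print(f"RTT {rtt_ms:>2} ms -> {seconds:.2f} s for 1000 segments")
```

The number of round trips is the same in every case; only the duration of each round trip changes, so the transfer time in this model scales directly with latency.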

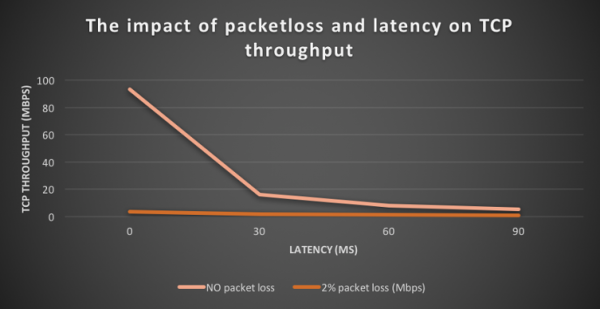

The test values are very explicit:

| Round trip latency | TCP throughput |
| --- | --- |
| 0 ms | 93.5 Mbps |
| 30 ms | 16.2 Mbps |
| 60 ms | 8.07 Mbps |
| 90 ms | 5.32 Mbps |
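These figures are roughly what one would expect if at most one window of data can be in flight per round trip, which bounds throughput at window size divided by round-trip time. The sketch below assumes a window of 65,535 bytes (the largest TCP window without window scaling). The article does not state the window size used in the test, so this value is only an assumption used for comparison.

```python
def window_limited_mbps(window_bytes, rtt_ms):
    """Upper bound on throughput when one window is sent per round trip."""
    return window_bytes * 8 / (rtt_ms / 1000) / 1_000_000


ASSUMED_WINDOW_BYTES = 65_535   # assumption: not stated in the article
measured_mbps = {30: 16.2, 60: 8.07, 90: 5.32}   # values from the table above

for rtt_ms, measured in measured_mbps.items():
    bound = window_limited_mbps(ASSUMED_WINDOW_BYTES, rtt_ms)
    print(f"{rtt_ms} ms: bound {bound:.2f} Mbps, measured {measured} Mbps")
```

The 0 ms row is left out because the bound is undefined there; in that case another limit, such as the capacity of the link itself, sets the ceiling.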

TCP is Impacted by Retransmission and Packet Loss

How the TCP Congestion Window Handles Missing Acknowledgment Packets

The TCP congestion window mechanism deals with missing acknowledgment packets as follows: if an acknowledgment has not arrived after a certain period of time, the packet is considered lost and the TCP congestion window is reduced by half. Throughput drops accordingly, which reflects the sender's perception that capacity on the route is limited. The window can then start growing again as long as acknowledgment packets are received properly.
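The halve-then-regrow behaviour can be sketched with a small simulation. This is a simplified model and not an actual TCP implementation: the growth of one segment per round trip, the starting window, the cap, and the rounds in which losses occur are all assumptions chosen for illustration.

```python
def simulate_window(rounds, loss_rounds, start_window=10.0, max_window=64.0):
    """Return the congestion window (in segments) at each round trip.

    Simplified model: the window is halved in any round where a loss is
    detected, and otherwise grows by one segment per round trip
    (the additive increase is an assumption of this sketch).
    """
    window = start_window
    history = []
    for round_index in range(rounds):
        history.append(window)
        if round_index in loss_rounds:
            window = max(window / 2, 1.0)          # loss detected: halve the window
        else:
            window = min(window + 1, max_window)   # ACKs received: grow again
    return history


no_loss = simulate_window(rounds=50, loss_rounds=set())
with_loss = simulate_window(rounds=50, loss_rounds={10, 20, 30, 40})
print(f"average window, no loss:   {sum(no_loss) / len(no_loss):.1f} segments")
print(f"average window, with loss: {sum(with_loss) / len(with_loss):.1f} segments")
```

Even a handful of losses keeps the average window, and therefore the average throughput, well below what the loss-free run reaches.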

Packet loss will have two effects on the speed of transmission of data:

- Packets will need to be retransmitted (even if only the acknowledgment packet got lost and the packets got delivered)

- The TCP congestion window size will not permit an optimal throughput

With 2% packet loss, TCP throughput is between 6 and 25 times lower than with no packet loss.

| Round trip latency | TCP throughput with no packet loss | TCP throughput with 2% packet loss |
| --- | --- | --- |
| 0 ms | 93.5 Mbps | 3.72 Mbps |
| 30 ms | 16.2 Mbps | 1.63 Mbps |
| 60 ms | 8.07 Mbps | 1.33 Mbps |
| 90 ms | 5.32 Mbps | 0.85 Mbps |
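The "6 to 25 times lower" range can be checked directly from the measured values with a few lines of Python. For the 60 ms row, this sketch uses the 8.07 Mbps figure from the first table of test values.

```python
# Throughput in Mbps by round-trip latency in ms, copied from the tables above.
# The 60 ms no-loss figure uses 8.07 Mbps, the value given in the first table.
no_loss = {0: 93.5, 30: 16.2, 60: 8.07, 90: 5.32}
two_percent_loss = {0: 3.72, 30: 1.63, 60: 1.33, 90: 0.85}

# Ratio of loss-free throughput to throughput with 2% loss, per latency.
slowdown = {rtt: no_loss[rtt] / two_percent_loss[rtt] for rtt in no_loss}
for rtt, factor in slowdown.items():
    print(f"{rtt:>2} ms: {factor:.1f}x lower with 2% loss")
```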

This applies irrespective of the reason for losing acknowledgment packets (genuine congestion, a server issue, packet shaping, etc.).
