Diving deeper into multiplexing and server push

In the first part of this series, we summarized the main technical challenges of the historical HTTP/1.x protocol, which led the Internet Engineering Task Force (IETF) to ratify HTTP/2 under RFC 7540 back in 2015.

We discussed the main technical improvements in the way data is transferred from the server to the client, thanks to a new binary framing layer and header compression, as well as in the way communication between a client and a server is handled, via techniques like “multiplexing” and “server push”.

The conclusion could have been: “Go ahead and adopt HTTP/2 everywhere!”

Well, let’s see whether that is the case by looking at two HTTP/2 features: multiplexing and server push. The point here is not to go into every technical detail of these concepts, which can become quite complex, but to cover the basics and illustrate that, in networking, the best is sometimes the enemy of “good enough”.

Multiplexing to cut delay… maybe

Let’s face it: we have not yet found a way to exceed the speed of light, and data transmission over networks is still bound by that physical limit.

Even at the speed of light, sending data from New York to Sydney takes about 50 ms. Once the additional delay introduced by intermediate network components is taken into account, this one-way delay is more likely to be around 80 ms. Since establishing a TCP session requires a three-way handshake in which three messages (SYN, SYN-ACK and ACK) each cross the link once, a single session establishment takes about 3 × 80 ms = 240 ms in this case.
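The arithmetic above can be reproduced in a few lines of Python. The 80 ms one-way delay and the three handshake legs come from the text; the great-circle distance of roughly 16,000 km between New York and Sydney is an illustrative assumption added here.

```python
# Speed of light in vacuum, converted to km per millisecond (299,792.458 km/s).
SPEED_OF_LIGHT_KM_PER_MS = 299_792.458 / 1000

# Assumed great-circle distance New York -> Sydney (illustrative value).
NY_SYDNEY_KM = 16_000


def propagation_delay_ms(distance_km: float) -> float:
    """Lower bound on one-way delay: distance divided by the speed of light."""
    return distance_km / SPEED_OF_LIGHT_KM_PER_MS


def handshake_ms(one_way_delay_ms: float, legs: int = 3) -> float:
    """Time for SYN, SYN-ACK and ACK to each cross the link once."""
    return legs * one_way_delay_ms


print(f"speed-of-light bound: {propagation_delay_ms(NY_SYDNEY_KM):.1f} ms")
print(f"handshake at 80 ms one-way: {handshake_ms(80):.0f} ms")
```

The speed-of-light bound comes out at about 53 ms, in line with the 50 ms quoted above. Note that a client may send its first request right after its final ACK, so the wait it actually observes before the request leaves is closer to one round trip (2 × 80 ms); the 240 ms figure counts all three legs of the handshake.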

You can easily see why finding ways to reduce the number of required round trips can greatly enhance an application’s performance. This is particularly true for interactive web applications.

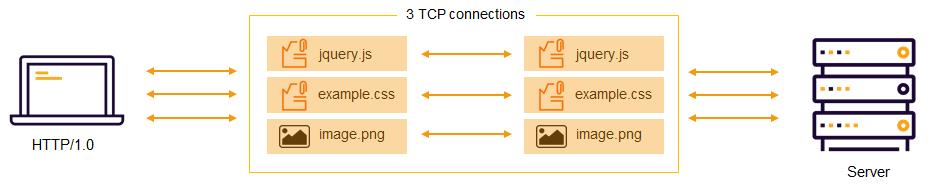

With the older HTTP/1.0 protocol, each request/response exchange requires a dedicated TCP connection. In addition, browsers typically open at most six simultaneous TCP connections per domain.

In the example above, transmitting one JavaScript file, one stylesheet and one image requires three TCP connections with HTTP/1.0. This not only adds round trips to set up each TCP connection, but also consumes more network resources to handle these sessions.
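To make the comparison concrete, the hypothetical helpers below count the TCP handshakes each protocol needs for resources on a single domain and the handshake time HTTP/2 avoids, reusing the 240 ms per handshake estimated for the New York to Sydney link. Treating the HTTP/1.0 connections as opened one after another is an assumption of this sketch; the six-connection browser limit and parallel connection setup are not modeled.

```python
HANDSHAKE_MS = 240  # per-handshake estimate from the New York-Sydney example


def handshakes_http10(n_resources: int) -> int:
    """HTTP/1.0 without keep-alive: one dedicated TCP connection per request."""
    return n_resources


def handshakes_http2(n_resources: int) -> int:
    """HTTP/2: all requests to the domain share a single TCP connection."""
    return 1 if n_resources > 0 else 0


def handshake_time_saved_ms(n_resources: int) -> int:
    """Handshake time avoided by HTTP/2, assuming sequential HTTP/1.0 connections."""
    extra = handshakes_http10(n_resources) - handshakes_http2(n_resources)
    return extra * HANDSHAKE_MS


resources = ["app.js", "style.css", "logo.png"]  # hypothetical file names
n = len(resources)
print(f"HTTP/1.0: {handshakes_http10(n)} handshakes, HTTP/2: {handshakes_http2(n)} handshake")
print(f"saved: {handshake_time_saved_ms(n)} ms")
```

For the three resources of the example, this reports two avoided handshakes, or 480 ms under the sequential assumption.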

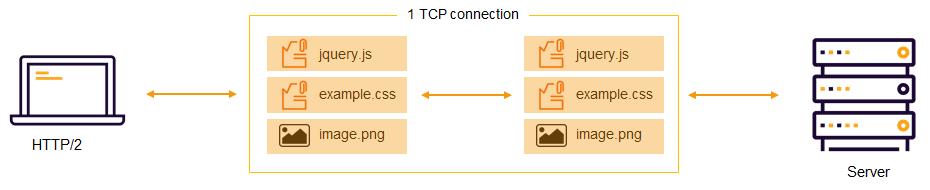

HTTP/2 solves this problem by supporting the multiplexing technique.

Instead of using a dedicated TCP session for each requested element, multiplexing allows the browser to request all elements over the same TCP connection.
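Under the hood, multiplexing works by splitting each request and response into frames tagged with a stream identifier, so frames from different streams can be interleaved on one connection and reassembled by the receiver. The sketch below models only that idea with plain tuples: the round-robin scheduling, the tiny frame size and the payloads are simplifications chosen for illustration, not the real binary format, in which RFC 7540 gives every frame a 9-byte header carrying its length, type, flags and a 31-bit stream identifier.

```python
from collections import defaultdict
from itertools import zip_longest

FRAME_SIZE = 4  # bytes per frame; deliberately tiny for illustration


def split_into_frames(stream_id: int, payload: bytes, size: int = FRAME_SIZE):
    """Cut one stream's payload into (stream_id, chunk) frames."""
    return [(stream_id, payload[i:i + size]) for i in range(0, len(payload), size)]


def multiplex(streams: dict[int, bytes]) -> list[tuple[int, bytes]]:
    """Interleave frames from all streams round-robin onto one 'connection'."""
    per_stream = [split_into_frames(sid, data) for sid, data in streams.items()]
    wire = []
    for round_frames in zip_longest(*per_stream):
        # Streams that have run out of frames are padded with None; skip them.
        wire.extend(frame for frame in round_frames if frame is not None)
    return wire


def demultiplex(wire: list[tuple[int, bytes]]) -> dict[int, bytes]:
    """Reassemble each stream by concatenating its frames in arrival order."""
    buffers = defaultdict(bytearray)
    for stream_id, chunk in wire:
        buffers[stream_id] += chunk
    return {sid: bytes(buf) for sid, buf in buffers.items()}


# Client-initiated HTTP/2 streams use odd identifiers: 1, 3, 5, ...
streams = {1: b"console.log('hi');", 3: b"body{margin:0}", 5: b"\x89PNG..."}
wire = multiplex(streams)
print([sid for sid, _ in wire])  # stream IDs in the order they share the connection
assert demultiplex(wire) == streams  # each stream is reassembled intact
```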

In this example, HTTP/2 saves two TCP session establishments, that is, 2 × 240 ms = 480 ms of handshake time between New York and Sydney if those connections would otherwise have been opened one after another. That is a huge gain!

Indeed… in most cases…

Now let’s assume that the network experiences congestion. Since multiple web transactions are carried over the same TCP connection, and TCP delivers data strictly in order, a lost packet holds back everything sent after it until it has been retransmitted. All transactions are therefore affected by this degradation (packet loss and retransmission), even if the lost data only concerns a single request!
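This effect is commonly called TCP head-of-line blocking. The toy model below, with hypothetical segment lists and a single lost segment, compares which requests are stalled when they share one connection (HTTP/2) and when each has its own connection (HTTP/1.0). It ignores timing, congestion control and window sizes; it only captures the in-order delivery rule.

```python
def stalled_streams(segments: list[tuple[int, int]], lost: int) -> set[int]:
    """Return the streams with data stuck behind a lost segment.

    `segments` is the list of (sequence_number, stream_id) sent on one TCP
    connection; `lost` is the sequence number of the dropped segment.
    Because TCP delivers in order, the lost segment and everything after it
    wait for the retransmission.
    """
    return {stream for seq, stream in segments if seq >= lost}


# Three requests (streams 1, 3, 5) interleaved on one HTTP/2 connection.
# Segment 3 carries data for stream 1 only.
shared = [(0, 1), (1, 3), (2, 5), (3, 1), (4, 3), (5, 5)]
print("HTTP/2, one connection:", sorted(stalled_streams(shared, lost=3)))
# -> HTTP/2, one connection: [1, 3, 5]

# HTTP/1.0: each request on its own connection.
separate = {1: [(0, 1), (1, 1)], 3: [(0, 3), (1, 3)], 5: [(0, 5), (1, 5)]}
lost_per_connection = {1: 1}  # hypothetical: only stream 1's connection drops segment 1
affected = set()
for stream, segs in separate.items():
    if stream in lost_per_connection:
        affected |= stalled_streams(segs, lost_per_connection[stream])
print("HTTP/1.0, separate connections:", sorted(affected))
# -> HTTP/1.0, separate connections: [1]
```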

This shows how techniques designed to improve performance can, in some cases, make the situation worse.

Server push proactively sends additional resources… but are they already in the cache? Doh!

Resource loading in the browser is hard! As we’ve seen previously, performance is tightly coupled to network latency.

Furthermore, the order of execution is extremely important. For example, loading a JavaScript file blocks the page: the HTML parsing process stops until the script has been loaded and executed. CSS (Cascading Style Sheets) resources are blocking too, since the browser does not render the page until they are loaded.

So two of the major questions in web application performance optimization are: “How can we load resources more efficiently?” and “What are the most important resources to load first?”

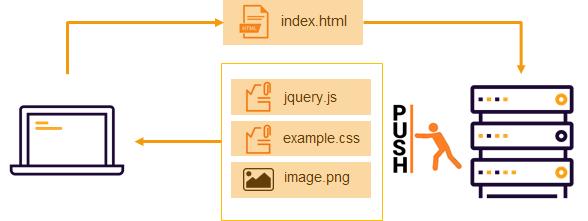

In HTTP/2, a server can anticipate that the browser will need additional resources later and proactively send them without waiting for the browser to request them. This is called “Server Push”.
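The sketch below outlines, in simplified form, what a pushing server emits for one request. In HTTP/2 each pushed resource is announced with a PUSH_PROMISE frame on the client's request stream and then delivered on a server-initiated stream with an even identifier. The `PUSH_MAP` table, the `plan_server_push` helper and the `"RESPONSE"` label (standing in for the HEADERS and DATA frames of a response) are hypothetical simplifications, not a real server API.

```python
# Hypothetical mapping from a page to the sub-resources the server expects it to need.
PUSH_MAP = {"/index.html": ["/app.js", "/style.css", "/logo.png"]}


def plan_server_push(path: str, request_stream: int, first_push_stream: int = 2):
    """Return a simplified list of (frame, stream_id, detail) tuples for one request.

    Pushed resources are promised on the client's request stream before the
    page itself is sent, then delivered on even-numbered server streams.
    """
    frames = []
    promised = []
    stream = first_push_stream
    for resource in PUSH_MAP.get(path, []):
        frames.append(("PUSH_PROMISE", request_stream,
                       {"promised_stream": stream, "path": resource}))
        promised.append((stream, resource))
        stream += 2  # server-initiated stream IDs stay even
    frames.append(("RESPONSE", request_stream, path))  # the page the client asked for
    for pushed_stream, resource in promised:
        frames.append(("RESPONSE", pushed_stream, resource))  # sent without a request
    return frames


for frame in plan_server_push("/index.html", request_stream=1):
    print(frame)
```

Sending the promises before the page follows the idea that the browser should learn about a push before it parses a reference to that resource and requests it itself.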

Anticipating the client’s needs seems to be a great idea, right?

Well, in many circumstances, it is indeed a very efficient way to improve performance by reducing the number of requests and sending needed data earlier.

But if the browser already has the data in its cache, is it a good idea to send it anyway? Of course not! Doing so would needlessly consume network and system resources.

The main problem here is that the server has no knowledge of the client’s cache state. Among other workarounds, one proposed solution is called “Cache Digest”: the browser sends the server a digest of the resources already available in its cache, so the server knows it does not need to push them.
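A simplified sketch of the idea follows: the browser hashes the URLs it has cached and sends the resulting set, and the server skips any planned push whose hash appears in it. The actual Cache Digest proposal used a more compact probabilistic encoding; this sketch uses a plain set of truncated SHA-256 hashes instead, which, like the real digest, can occasionally report a false match. All function names and URLs are hypothetical.

```python
import hashlib


def url_hash(url: str, n_bytes: int = 4) -> bytes:
    """Truncated SHA-256 of a URL; truncation keeps the digest small."""
    return hashlib.sha256(url.encode("utf-8")).digest()[:n_bytes]


def build_digest(cached_urls: list[str]) -> set[bytes]:
    """Client side: summarize the cache as a set of short URL hashes."""
    return {url_hash(u) for u in cached_urls}


def filter_pushes(candidates: list[str], digest: set[bytes]) -> list[str]:
    """Server side: push only resources the digest says the client lacks."""
    return [u for u in candidates if url_hash(u) not in digest]


digest = build_digest(["https://example.com/app.js", "https://example.com/style.css"])
to_push = filter_pushes(
    ["https://example.com/app.js",
     "https://example.com/style.css",
     "https://example.com/logo.png"],
    digest,
)
print(to_push)  # only the resource missing from the client's cache
```

A false match only costs a missed push, since the browser can still request the resource normally, which is why a lossy digest is acceptable here.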

Unfortunately, this kind of technique still suffers from browser inconsistencies and a lack of server support.

In conclusion

Web technologies continuously evolve to offer the best possible user experience. HTTP/2 brings major improvements over the previous HTTP/1.x standard, and newer protocols like HTTP/3 (which runs over QUIC, originally short for “Quick UDP Internet Connections”) will definitely play an important role in web development in the future.

Nevertheless, as we’ve seen in this article, the devil is often in the details. Great capabilities can sometimes lead to unexpected results: the efficiency of the techniques described here depends on external factors such as network conditions and browser or server support.

Having in your arsenal a monitoring solution that guarantees full end-to-end visibility (browser identification, network performance, transaction details) is a key success factor in any web platform optimization project.

You can also see how complete visibility into your SaaS and cloud services can make IT life just a little easier.
